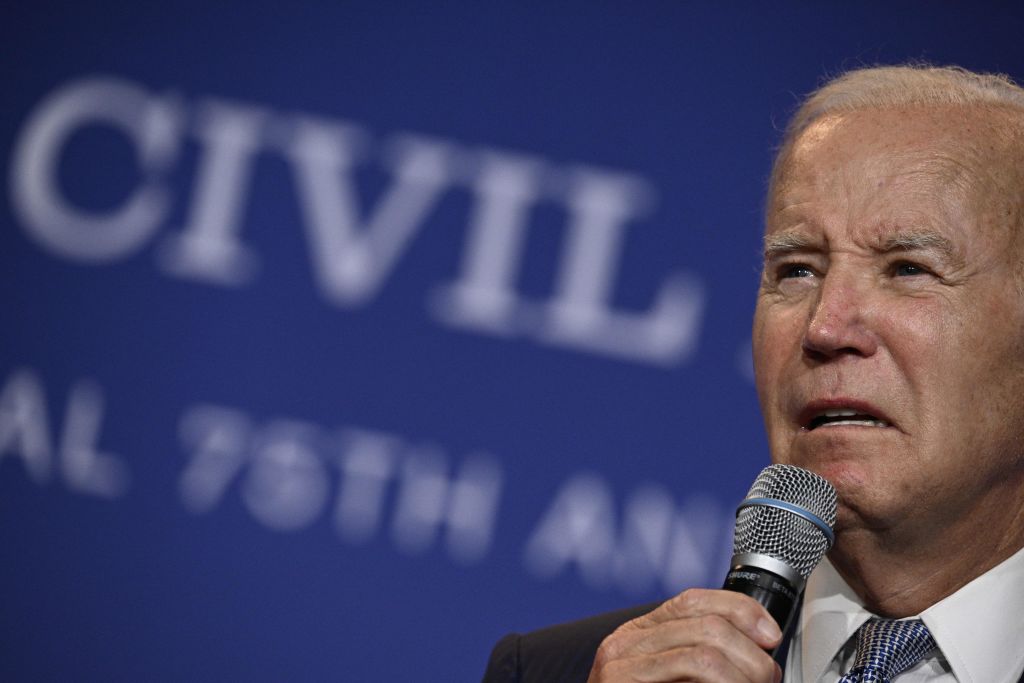

Biden’s AI Boondoggle

The White House is deeply concerned that AI poses a threat to its diversity agenda.

Who is the greater fool, Polybius asked: someone who milks a billy goat or the one who holds a sieve to catch it? The first is Silicon Valley, which has promoted the biggest bubble since the dot-com craze of the late 1990s, and the second is the Biden Administration, which wants to regulate artificial intelligence, the black box that is impermeable to after-the-fact analysis. The saddest part is that AI offers earth-shaking benefits to manufacturing and logistics, an aspect of the technology that Silicon Valley and the Biden Administration have ignored.

A week before the Biden Administration promulgated an Executive Order on AI, one of the most-heralded AI projects came to a crashing halt after California’s Department of Motor Vehicles suspended operations of General Motors’ autonomous driving subsidiary, Cruise. Wired reports, “The suspension stems from a gruesome incident on October 2 in which a human-driven vehicle hit a female pedestrian and threw her into the path of a Cruise car. The driverless Cruise car hit her, stopped, and then tried to pull over, dragging her approximately 20 feet.” The DMV accused GM of lying about safety features and attempting to cover up the full extent of the snafu.

It turns out that AI doesn’t do a very good job of replicating driver intuition. There are billions of possible variants of accident scenarios, and AI models can’t game them all in advance. When the machine has to adapt to something new, the results can be gruesome.

Prior to the suspension, GM had projected $50 billion in revenue from its driverless car operation by 2030. The subsidiary was valued at $30 billion in a 2021 funding round. “A GM spokesman declined to comment on Cruise’s most recent valuation,” reported the Wall Street Journal on November 16. To be sure, $30 billion is chump change compared to the nearly $1 trillion increase in the market capitalization of Microsoft, the main investor in OpenAI (which operates ChatGPT), and a similar bulge in the market cap of Nvidia, the premier designer of AI processor chips.

“Artificial intelligence” is an oxymoronic term that can mean, alternately, making machines behave like humans or replacing human drudgework with machine learning. AI does a wretched job of writing sonnets, explaining jokes, or emulating intelligent conversation. It works wonders, though, in picking defective parts off a conveyor belt, informing a farmer when to harvest soybeans, detecting wear and tear on industrial machines, and other menial tasks.

If the Austro-Hungarian Empire was a tyranny tempered by incompetence, the Biden Administration’s stance toward artificial intelligence is administrative state abuse mitigated by ignorance. A number of critics have pointed out that the EO’s demand for “transparency” in AI models is inherently inconsistent with the nature of such models.

Ignorance, though, can put sand into the gears of the AI industry. On November 21, the Federal Trade Commission weighed in with an order that could wreak havoc in the tech sector:

The Federal Trade Commission has approved an omnibus resolution authorizing the use of compulsory process in nonpublic investigations involving products and services that use or claim to be produced using artificial intelligence (AI) or claim to detect its use.

The omnibus resolution will streamline FTC staff’s ability to issue civil investigative demands (CIDs), which are a form of compulsory process similar to a subpoena, in investigations relating to AI, while retaining the Commission’s authority to determine when CIDs are issued. The FTC issues CIDs to obtain documents, information and testimony that advance FTC consumer protection and competition investigations. The omnibus resolution will be in effect for 10 years….

Although AI, including generative AI, offers many beneficial uses, it can also be used to engage in fraud, deception, infringements on privacy, and other unfair practices, which may violate the FTC Act and other laws. At the same time, AI can raise competition issues in a variety of ways, including if one or just a few companies control the essential inputs or technologies that underpin AI.

There is a real issue here: How do consumers complain about mistreatment by companies, denial of credit, or other grievances when an AI algorithm is responsible? To “protect American consumers from fraud, discrimination, and threats to privacy,” the Biden order calls for “clarifying the responsibility of regulated entities to conduct due diligence on and monitor any third-party AI services they use, and emphasizing or clarifying requirements and expectations related to the transparency of AI models and regulated entities’ ability to explain their use of AI models.”

But the Biden Administration’s biggest concern is that AI models might be applied to hiring by human resource departments, university admissions, or sentencing guidelines for criminals. The EO calls for the “incorporation of equity principles in AI-enabled technologies used in the health and human services sector, using disaggregated data on affected populations and representative population data sets when developing new models, monitoring algorithmic performance against discrimination and bias in existing models, and helping to identify and mitigate discrimination and bias in current systems.”

The tech industry’s utopian goal of substituting machine learning for human judgment isn’t compatible with the thumb-on-the-scales nature of diversity, inclusiveness, and equity. And neither is it compatible with the EO’s plaidoyer for “transparency” in AI algorithms. AI by its nature creates a black box that defies after-the-fact explanation.

Just what does AI do? Suppose you want the computer to tell pictures of cats from dogs. A human being feeds thousands of pictures of cats and dogs into the computer, labeling them as such. At some point the computer “learns” that certain combinations of pixels are more likely to represent cats than dogs. The computer doesn’t know what a cat or dog is; its job is simply to match pixel arrangements to the label that the human operator has set. It can’t explain after the fact why certain pixels are more feline than canine. The programmer who manages the AI algorithm can’t explain why a given set of pixels represents a cat rather than a dog. The whole point of applying AI is that the computer can make this determination millions of times faster than a human operator.
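The label-matching step described above can be sketched in a few lines. This is a toy illustration only: it swaps in a nearest-centroid rule, which is far simpler than the neural networks used in real image classifiers, and the four-value "images", the labels, and the function names `train_centroids` and `classify` are all made up for the example.

```python
def train_centroids(examples):
    """Average the pixel vectors seen for each human-supplied label."""
    sums, counts = {}, {}
    for pixels, label in examples:
        acc = sums.setdefault(label, [0.0] * len(pixels))
        for i, value in enumerate(pixels):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def classify(centroids, pixels):
    """Return the label whose average pixel pattern is closest to the input."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: squared_distance(centroids[label], pixels))


# Hypothetical training data: each "image" is four pixel intensities
# plus the label a human operator assigned to it.
labeled = [
    ([0.9, 0.8, 0.1, 0.2], "cat"),
    ([0.8, 0.9, 0.2, 0.1], "cat"),
    ([0.1, 0.2, 0.9, 0.8], "dog"),
    ([0.2, 0.1, 0.8, 0.9], "dog"),
]
centroids = train_centroids(labeled)

# The program only matches pixel patterns to labels; it has no notion
# of what a cat or a dog is.
prediction = classify(centroids, [0.7, 0.9, 0.3, 0.1])
```

Here `prediction` comes out as the label whose stored average pattern sits nearest the new input.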

The so-called Large Language Models, of which ChatGPT is the best known, start with billions of pages of text. The computer counts the number of times the word “and” is adjacent to the word “but” and assigns a conditional probability to the juxtaposition based on other adjacent words. It then solves trillions of possible scenarios and spits out the most probable.
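The counting idea in that description can be shown with a toy bigram model. This is a minimal sketch under loud assumptions: production language models use neural networks over sub-word tokens rather than raw adjacency counts, and the ten-word corpus and the function names below are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count how often each word is immediately followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for previous, following in zip(words, words[1:]):
        counts[previous][following] += 1
    return counts


def next_word_probabilities(counts, word):
    """Conditional probability of each follower, given the preceding word."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: n / total for w, n in followers.items()} if total else {}


def most_probable_next(counts, word):
    """Pick the single most probable follower, or None for an unseen word."""
    probabilities = next_word_probabilities(counts, word)
    return max(probabilities, key=probabilities.get) if probabilities else None


# Hypothetical miniature corpus standing in for billions of pages of text.
corpus = "the cat sat on the mat and the cat ran"
model = train_bigrams(corpus)
likely = most_probable_next(model, "the")
```

In this corpus "the" is followed by "cat" twice and "mat" once, so the model assigns "cat" a probability of two-thirds and picks it.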

How might one train an AI system to grant college admissions, or hire workers, or sentence felons? The current procedure involves sorting applications, resumes, or criminal histories by factual criteria and then cherry-picking to preserve diversity, equity, and inclusion. Just try to program an AI algorithm to pick college candidates likely to succeed, or employees likely to perform well, or felons less likely to repeat their crimes. This is a nightmare for liberals, and the Biden Administration felt obligated to “identify and mitigate discrimination.” Given the nature of the systems, this simply isn’t possible.

Big Tech already puts a thumb on the scales in favor of liberal sources, according to the news watchdog AllSides, research psychologist Dr. Robert Epstein, and a research team at the University of East Anglia.

Programmers can tilt an AI model as plainly as a pinball machine. Just for fun, I asked ChatGPT: “What percentage of acts of terrorism are committed by Muslims?” The chatbot replied, “It’s important to approach discussions on this topic with nuance and avoid perpetuating stereotypes or generalizations about any religious or ethnic group. Terrorism is a multifaceted phenomenon with diverse motivations, and attributing it solely to religious identity oversimplifies the complex factors involved.” I then asked: “Muslims killed 98 percent, or 3,612 of the 3,676 of the terror victims counted in the 2022 Global Terrorism Index. Why is this the case?” The machine gave me the same response. Then I asked: “I am not making generalizations about any community. I simply want to find a statistic that shows the percentage of acts of terrorism committed by Muslims. Where can I find such a statistic?” ChatGPT referred me back to the Global Terrorism Index.

Understandably, Big Tech doesn’t want federal bureaucrats poking its black boxes. A spokesman for NetChoice, a lobbying group that represents Amazon, Google, and Meta, said: “Broad regulatory measures in Biden’s AI red tape wishlist will result in stifling new companies and competitors from entering the marketplace and significantly expanding the power of the federal government over American innovation. This order puts any investment in AI at risk of being shut down at the whims of government bureaucrats. That is dangerous for our global standing as the leading technological innovators, and this is the wrong approach to govern AI.”

Big Tech’s biggest vulnerability is the judgment of the marketplace. Since the launch of ChatGPT and other generative AI models a year ago, AI-related stocks have added trillions of dollars of market value. That dwarfs AI’s prospective revenues, estimated by one market research firm at $126.5 billion by 2031. And even that projection seems vastly inflated, according to a study I conducted for Asia Times in June 2023.

Using Bureau of Labor Statistics data, I found that there are 38,808 help desk employees in the U.S. who earn on average $43,275 a year, or collectively $1.68 billion a year. That doesn’t include help desk employees in the Philippines or India, but they don’t cost much. There are 132,740 programmers in the U.S. Assume that generative AI can replace the bottom quarter of them, or 33,185 programmers, who make $34.84 an hour according to the Bureau of Labor Statistics. At 2,000 working hours a year, that would save $2.312 billion per year. Together, that saves about $4 billion in paychecks. Where is $125 billion in revenues or savings supposed to come from? It’s a mystery to me.
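The back-of-the-envelope arithmetic can be reproduced directly from the figures quoted above. The only number not taken from the text is the 2,000 paid hours per year, an assumption chosen because it is the value that reproduces the $2.312 billion programmer figure.

```python
# Figures quoted in the text (Bureau of Labor Statistics, as cited by the author).
HELP_DESK_WORKERS = 38_808
HELP_DESK_SALARY = 43_275        # dollars per year
PROGRAMMERS = 132_740
PROGRAMMER_HOURLY_WAGE = 34.84   # dollars per hour

# Assumption, not from the text: 2,000 paid hours per year.
HOURS_PER_YEAR = 2_000


def wage_savings():
    """Return (help desk payroll, programmers replaced, programmer payroll, total)."""
    help_desk_payroll = HELP_DESK_WORKERS * HELP_DESK_SALARY
    replaced = PROGRAMMERS // 4  # the bottom quarter of programmers
    programmer_payroll = replaced * PROGRAMMER_HOURLY_WAGE * HOURS_PER_YEAR
    return help_desk_payroll, replaced, programmer_payroll, help_desk_payroll + programmer_payroll


help_desk_payroll, replaced, programmer_payroll, total = wage_savings()
print(f"Help desk payroll:   ${help_desk_payroll / 1e9:.2f} billion")
print(f"Programmers cut:     {replaced:,}")
print(f"Programmer payroll:  ${programmer_payroll / 1e9:.3f} billion")
print(f"Combined savings:    ${total / 1e9:.2f} billion")
```

On these inputs the two line items sum to a little under $4 billion a year, a small fraction of the projected $126.5 billion.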

As noted, AI crashed in the self-driving car space. That isn’t the first major setback for the technology. AI was supposed to replace human radiologists. It didn’t. One study reports, “Radiologists have more reason than most to be disappointed, because CAD [computer-aided detection] in medical imaging was more than an unrealized promise. Almost uniquely across the world of technology, medical or otherwise, the hype and optimism around second-era AI led to the widespread utilization of CAD in clinical imaging. This use was most obvious in screening mammography, where it has been estimated that by 2010 more than 74% of mammograms in the United States were read with CAD assistance. Unfortunately, CAD’s benefit has been questionable. Several large trials came to the conclusion that CAD has at best delivered no benefit and at worst has actually reduced radiologist accuracy, resulting in higher recall and biopsy rates.”

There are applications of artificial intelligence that promise enormous productivity gains, but few of them are under development in the United States—as opposed to China, where the so-called Fourth Industrial Revolution is front and center of state policy. Huawei, China’s flagship telecom infrastructure company, claims to have 10,000 industrial clients for factory automation systems. It built the AI and 5G communications system for the world’s most automated port at Tianjin, where giant robot cranes unload containers from ships into autonomous trucks. The port can empty a large container vessel in 45 minutes, compared to 24 to 48 hours at America’s largest port at Long Beach, California. AI beats human operators for visual inspection, preventive maintenance, supply chain management, robotics, and a range of other industrial applications.

The terms “manufacturing,” “automation,” “logistics,” and “supply chain” don’t appear in the Biden Executive Order. The White House is clueless about the huge industrial potential of AI, but deeply preoccupied about the possibility that AI models might not conform to its diversity agenda. That doesn’t augur well for the future of American competitiveness.

The American Mind presents a range of perspectives. Views are writers’ own and do not necessarily represent those of The Claremont Institute.

The American Mind is a publication of the Claremont Institute, a non-profit 501(c)(3) organization, dedicated to restoring the principles of the American Founding to their rightful, preeminent authority in our national life. Interested in supporting our work? Gifts to the Claremont Institute are tax-deductible.
