How Expert Worship Is Ruining Science

Big tech and big media are betraying the truth—and the people.

What is science? Has it changed over time? Is it still working? The coronavirus pandemic has put a spotlight on science as a whole, and on biology and drug research in particular.

Now, the popular narrative is that if only we had listened to the scientists, we would have prevented this, presumably contrasting scientists with “politicians” and perhaps some unspecified “non-expert” others. The pandemic is happening against a completely unprecedented backdrop of censorship of what seems to me like ordinary people discussing the effects of different drugs and therapies.

The CEO of YouTube has specifically said that the platform would block people suggesting that vitamin C helps one recover from the coronavirus. This is, of course, done “in the name of” science, because everything ought to be done in the name of science in the West.

My current view is that many fields considered “scientific” in the West are a complete mess and lack the essential feature that makes something a science in the first place.

So, let’s go back to first principles. What is a science? If we look at science as a mechanistic process that takes some inputs and produces outputs, what are those inputs and outputs? Let’s take one of the most classic examples: Newton’s theory of gravity.

The theory of gravity, as we learn in middle school, is on its surface a simple formula:

$$F = G\frac{m_1 m_2}{r^2}$$

Here F is the force of gravity, m1 and m2 are the masses of the two objects, r is the distance between them (more precisely, between their centers of mass), and G is the gravitational constant. The formula needs a few key variables to be known: the masses of the objects, their centers of mass, and the distance between them. Underlying these measurements, you may want to know many more things, such as what exactly mass is, what distance is, whether gravity can be blocked, and so on.

Some interesting questions arise: Why does the gravitational constant have that particular value? Could it be smaller or larger in, say, another universe? But even if all those things are somewhat undetermined, you still have a functional mathematical instrument. Taken together, the notions of mass and distance and the formula, even if somewhat imprecise, comprise a model of reality. This model may not be perfectly accurate, but it does well enough for middle school physics problems.
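To make “reasoning over a model” concrete, here is a minimal Python sketch that plugs approximate textbook values into Newton’s formula, F = G·m1·m2/r². The constants (G ≈ 6.674×10⁻¹¹ N·m²/kg², the masses of the Earth and Moon, and their mean distance) are rounded values assumed for illustration, and the function name `gravitational_force` is hypothetical.

```python
# Approximate physical constants in SI units (rounded textbook values).
G = 6.674e-11                  # gravitational constant, N m^2 / kg^2
EARTH_MASS = 5.972e24          # kg
MOON_MASS = 7.342e22           # kg
EARTH_MOON_DISTANCE = 3.844e8  # m, mean center-to-center distance


def gravitational_force(m1: float, m2: float, r: float) -> float:
    """Newtonian attraction F = G * m1 * m2 / r**2 between two bodies, in newtons."""
    return G * m1 * m2 / r ** 2


force = gravitational_force(EARTH_MASS, MOON_MASS, EARTH_MOON_DISTANCE)
print(f"Earth-Moon force: {force:.2e} N")

# The model also yields a testable prediction: doubling the distance quarters the force.
ratio = gravitational_force(EARTH_MASS, MOON_MASS, 2 * EARTH_MOON_DISTANCE) / force
print(f"Force ratio at double distance: {ratio:.2f}")
```

With these rounded inputs the force comes out at about 1.98e+20 N. The point is not the number itself but that anyone can run the same few lines and get the same falsifiable prediction.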

Why do we care? Why do we need models of reality at all? There is a stock answer that “science helps engineering” and thereby improves our standard of living. This is true, but how, specifically, does science help engineering? By compressing parts of the world into a model, it lets us reason over the model, and that reasoning hopefully translates into reasoning about the world.

Notably, this process is not automatic. It relies on certain assumptions, such as:

- There is, in fact, a model being produced, which is able to create falsifiable predictions about the future.

- The model doesn’t wildly vary over time (it embodies actual compression of universal laws).

- Reasoning over a model is simpler than reasoning over the real world and accessible to many people or algorithms.

- There are clear agreements about what the inputs to the model are and how to measure them.

- The model concerns something that we are deliberately engineering, whether a social or a biological system.

- Though some inaccuracies in models are somewhat inevitable and form the basis for new models, wildly contradictory data indicates a problem in either the model or the data.

Clearly the theory of gravity, whether that of Newton or of Einstein, passes the test. Indeed, many things we learn pass the test: most physics, chemical equations, models of the cell, some economic theories. Not every model is strictly mathematical, although the boundary between chemical equations and math may be somewhat artificial. Regardless of that particular distinction, the models are there to help make reasoning easier for people.

These basic requirements for useful scientific progress are worth emphasizing because they highlight the stark contrast between the ideal of science and what we have today. The models that scientists produce are meant to simplify and de-mystify reality for other people, including and especially non-scientists. “De-mystify” here does not mean “remove the sense of awe,” as it often does, but rather “remove the mystery of how things work.” A good model is one that is both accurate and simple enough that it can be applied by nearly anyone.

Given how specialized the disciplines have become, not every person employed in science is able to produce models. There is a lot of work in gathering raw data, coming up with theories, writing grant applications, teaching existing material, and so on. Specialization means that a lot of people work in science, but not many look at the big picture: what output are we producing, especially in the realm of health and human sciences?

Stating the Obvious

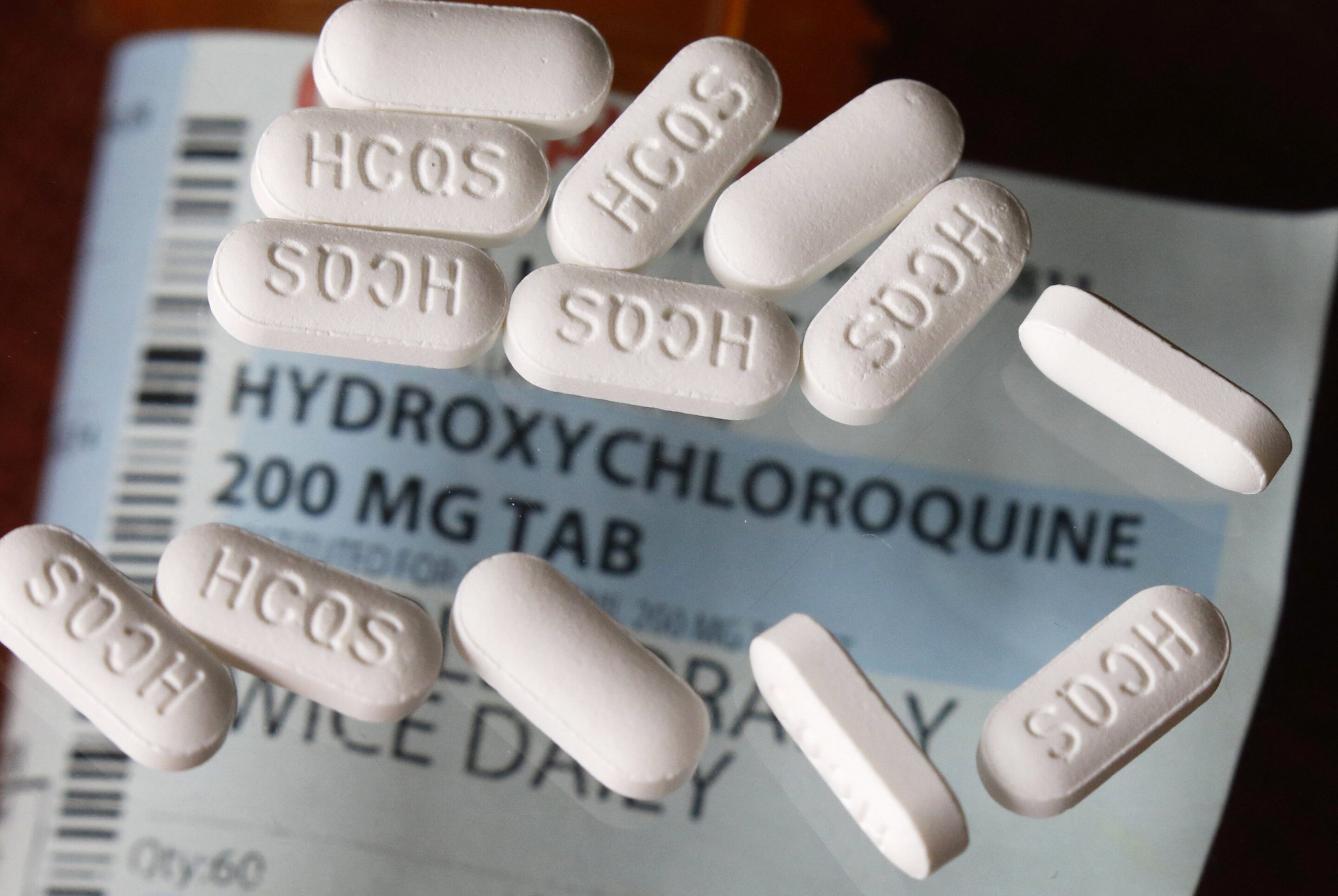

What we are producing instead of models are statements, such as: a particular drug (e.g., vitamin C, vitamin D, HCQ) helps or does not help against the flu, the coronavirus, or scurvy. These statements are not useless, even though they are not full models of reality. If they are true and we have good consensus on them, then even without understanding the mechanism of action or the models behind them, they can still form useful guidelines for action against a deadly disease.

However, the question is: do they? What exactly does the science say and, just as importantly, when does it say it? This is a tricky question, often replaced in popular media by “what do the journalists say the science says?”—which is yet another link further removed from the truth.

Let me give a hypothetical example. Let’s say that I create an experiment with a control group and treatment group, each containing one person. I give the treatment group (one person) a drug and watch how both groups develop. Let’s say they both get better around the same time. What can we conclude?

If you follow the scientific method, you might say that the sample size is too small to conclude anything. If you know statistical terminology, you might say that the experiment lacks the “statistical power” to detect a real effect, so its null result cannot show that the drug is ineffective; this is a more precise way of saying the sample size is too small. Either way, you would say that more data is needed.

However, according to modern journalistic “standards” in America, this study is definitive proof that the drug does not work, especially if saying so accomplishes some political goal. Of course, nobody runs experiments with two people, but journalists do sometimes report on experiments with 30 people, which can still lack the power to detect a modest effect.
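The point about power can be made quantitative. The sketch below uses the standard normal approximation for a two-sided test comparing two recovery proportions. The recovery rates (50% without the drug, 65% with it) are made-up assumptions chosen only for illustration, and the helper names are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def approx_power(p_control: float, p_treated: float, n_per_arm: int,
                 alpha: float = 0.05) -> float:
    """Approximate power of a two-sided two-proportion z-test.

    Normal approximation with unpooled variance; ignores the (negligible)
    chance of rejecting in the wrong direction.
    """
    se = sqrt((p_control * (1 - p_control) + p_treated * (1 - p_treated)) / n_per_arm)
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    return STD_NORMAL.cdf(abs(p_treated - p_control) / se - z_crit)


def n_per_arm_for_power(p_control: float, p_treated: float,
                        power: float = 0.8, alpha: float = 0.05) -> int:
    """Smallest per-arm size reaching the target power under the same approximation."""
    z_sum = STD_NORMAL.inv_cdf(1 - alpha / 2) + STD_NORMAL.inv_cdf(power)
    variance = p_control * (1 - p_control) + p_treated * (1 - p_treated)
    return ceil(z_sum ** 2 * variance / (p_treated - p_control) ** 2)


# Hypothetical recovery rates: 50% without the drug, 65% with it.
power_30_total = approx_power(0.50, 0.65, n_per_arm=15)
power_400_total = approx_power(0.50, 0.65, n_per_arm=200)
needed = n_per_arm_for_power(0.50, 0.65)
print(f"power with 15 per arm:  {power_30_total:.2f}")
print(f"power with 200 per arm: {power_400_total:.2f}")
print(f"per-arm size for 80% power: {needed}")
```

Under these assumed rates, a 30-person trial has only about a 13% chance of detecting a real 15-point improvement, so its null result says very little; roughly 167 people per arm are needed to reach the conventional 80% power.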

Within COVID and HCQ research alone, there are plenty of other issues. One famous study of veterans features a whole gamut of them: strange subgroup selection, an observational study presented in the media as if it were a controlled trial, and bizarre hazard ratios that supposedly indicate that azithromycin (AZ) plus HCQ is much better than HCQ alone, with no further discussion of what that implies. A study published in The Lancet has many of the same problems, along with the possibility that its data were entirely made up.

Our media and state epistemics, in which a drug is either good (a perfect cure) or bad (extremely harmful), are completely incapable of handling issues as complex as dosage, network effects, or person-dependent treatment.

This brings us to a philosophical question: what “evidence” is, what it should be, and how the term is used in practice. If you are a detective investigating a murder, almost everything is potential evidence: how the person died, what the weapon was, where the people who knew the victim were on the night of the murder, what drugs they were normally taking. Some of this, though it may be treated as evidence during the investigation, ends up not mattering in the end. Until the judgment is made, however, it’s best not to dismiss anything as “not evidence.”

In the view of a real scientist, everything is evidence, even when it is very unclear how to interpret it. Science is about trying to explain reality. So if we have two conflicting studies of a drug’s efficacy, there are many possible explanations.

The explanation we implicitly adopt, as a polarized society, is that one of the studies was done badly. While studies done under the pressure of a pandemic can be assumed to be of generally low quality, we also need to consider other obvious hypotheses, such as the drug working better for some people than for others. For example, it is quite plausible that the drug works better when given earlier in the disease. However, our desire to “help the worst off,” combined with the fact that it is easier to get the drugs to the most severely ill patients, means that we end up studying efficacy in people who are already very sick and may be beyond its help.
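A toy calculation shows how two honest trials of the same drug can disagree. The recovery probabilities below are invented for illustration: we simply assume the drug adds 15 percentage points for patients treated early and nothing for late-stage patients. `expected_effect` is a hypothetical helper, not a standard method.

```python
# Invented recovery probabilities by disease stage at the time of treatment.
RECOVERY = {
    "early": {"treated": 0.90, "control": 0.75},
    "late": {"treated": 0.40, "control": 0.40},
}


def expected_effect(share_early: float) -> float:
    """Expected treated-minus-control recovery difference in a trial whose
    participants are `share_early` early-stage and the rest late-stage."""
    mix = {"early": share_early, "late": 1.0 - share_early}
    return sum(
        mix[stage] * (RECOVERY[stage]["treated"] - RECOVERY[stage]["control"])
        for stage in RECOVERY
    )


outpatient_trial = expected_effect(share_early=0.8)  # mostly early, milder cases
hospital_trial = expected_effect(share_early=0.0)    # only late-stage, hospitalized cases
print(f"outpatient trial effect: {outpatient_trial:+.2f}")
print(f"hospital trial effect:   {hospital_trial:+.2f}")
```

Under these assumptions the outpatient trial finds a 12-point benefit and the hospital trial finds none, and neither was done badly: they answer different questions about different patients.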

Sometimes these may be weaker evidence than a well-done randomized controlled trial (RCT), though even that is not guaranteed, given plausible network effects. The issue is that instead of weighing “stronger” and “weaker” evidence, we treat one as absolute truth and the other as heresy. Instead of calling all of these things “evidence,” we have reserved the term for whatever journalists call “studies,” which may or may not be RCTs, and which may or may not have been done or interpreted correctly.

“Evidence,” however important, is not the full story of science, which, once again, needs to produce models. How does the drug operate? Where does it operate? If one is doing an observational study, it’s worth asking exactly which factors influence doctors to prescribe the drug or not, instead of simply throwing controls at the data until the desired outcome appears.
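The question of why doctors prescribe is what epidemiologists call confounding by indication. In the invented counts below (not data from any real study), doctors give the drug mostly to severe cases. Within each severity level the treated patients do better, yet the naive comparison makes the drug look harmful. Comparing within strata, or standardizing to a common severity mix, removes this particular distortion. The helper names are hypothetical.

```python
# Invented counts: (recovered, total) for each (severity, treated) cell.
DATA = {
    ("mild", True): (19, 20),
    ("mild", False): (72, 80),
    ("severe", True): (40, 80),
    ("severe", False): (8, 20),
}


def rate(recovered: int, total: int) -> float:
    return recovered / total


def naive_rates(data):
    """Recovery rate by treatment status, ignoring severity entirely."""
    out = {}
    for treated in (True, False):
        rec = sum(r for (_, t), (r, _n) in data.items() if t == treated)
        tot = sum(n for (_, t), (_r, n) in data.items() if t == treated)
        out[treated] = rec / tot
    return out


def stratified_differences(data):
    """Treated-minus-untreated recovery rate within each severity level."""
    severities = sorted({s for s, _ in data})
    return {s: rate(*data[(s, True)]) - rate(*data[(s, False)]) for s in severities}


def standardized_rates(data):
    """Rates standardized to the overall severity mix (direct standardization)."""
    severities = sorted({s for s, _ in data})
    total = sum(n for _, n in data.values())
    weights = {s: sum(data[(s, t)][1] for t in (True, False)) / total for s in severities}
    return {t: sum(weights[s] * rate(*data[(s, t)]) for s in severities)
            for t in (True, False)}


naive = naive_rates(DATA)               # treated look worse overall
within = stratified_differences(DATA)   # treated do better in every stratum
standardized = standardized_rates(DATA)
print(naive, within, standardized)
```

Controls are only as good as the causal story behind them: adjusting for severity helps here because we assumed severity drives both prescribing and recovery, which is exactly the question the text says should be asked first.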

Statements such as “the drug has a 20% chance of helping with the disease” are treated as an “endpoint,” rather than as a description of something we don’t know: what distinguishes those 20% from the other 80%. The probability is not an inherent property of the drug, but rather a pointer to missing pieces of the causal graph. What we actually need is to work toward these causal graphs.
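A minimal simulation illustrates the idea. It assumes, purely for illustration, a hidden binary trait carried by about 20% of people and a toy causal rule in which the drug helps exactly those carriers. Averaged over everyone, the drug looks like a 20% chance; conditioned on the trait, the uncertainty disappears. The trait and the function names are hypothetical.

```python
import random


def drug_helps(has_trait: bool) -> bool:
    """Toy causal rule (an assumption): the drug helps exactly the trait carriers."""
    return has_trait


def simulate(n: int = 10_000, trait_prevalence: float = 0.2, seed: int = 0):
    """Return (overall, with-trait, without-trait) rates of being helped."""
    rng = random.Random(seed)
    traits = [rng.random() < trait_prevalence for _ in range(n)]
    helped = [drug_helps(t) for t in traits]

    def share(outcomes):
        return sum(outcomes) / len(outcomes)

    overall = share(helped)
    with_trait = share([h for h, t in zip(helped, traits) if t])
    without_trait = share([h for h, t in zip(helped, traits) if not t])
    return overall, with_trait, without_trait


overall, with_trait, without_trait = simulate()
print(f"overall: {overall:.3f}, with trait: {with_trait:.1f}, without trait: {without_trait:.1f}")
```

The population-level “20%” is real but tells us nothing about any individual; the variable that explains it is the thing a model should be looking for.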

“Silence, Layman!”

Tech censorship of large amounts of information relating to the virus, including early videos from Wuhan, has been downright criminal. Big Tech has fully bought into the frame of “experts” vs. “laypeople,” as if experts are always “correct” and laypeople are always “wrong” (unless they are repeating a statement by the experts).

If the laypeople are “wrong,” then education has massively failed to produce correct models that people can use in their own reasoning. Obviously, the default answer for many people is simply to pour more money into education. But we lack an understanding of what science and science education even are. Science is meant to produce world models, and education is supposed to impart them to everyone else. Neither is really doing this, and what we have in their place is one large uncanny valley of ever-changing statement production (how dangerous are public gatherings, really?).

Now, more abstract statements such as “Vitamin D/C helps with respiratory diseases” are what we would ideally have produced before the pandemic. Those can be operated on by laypeople and form a reasonable set of priors to be further refined when something new shows up. The original purpose of science is to produce functional information that can be operated on by both policy makers and laypeople. Said information can be shared by people who are themselves not scientists.

However, in our backwards society the idea of people coming to conclusions using previously available evidence is becoming taboo. This makes just about as much sense as tech companies censoring the correct calculation of gravity between two planets because the calculation wasn’t done by a scientist.

That’s not to say that all science needs to be public. There are good reasons for secrecy, such as in research into advanced weapons. However, all science that is public is there to empower people, not put them down. Not every scientific model needs to be understandable by every person; some models are too hard to understand and are thus better suited for use in a public, open-source algorithm.

Again, that’s not to say that all censorship is unjustified. There can be harmful misinformation, but one key role of any future academy must be to define a formal Overton Window for the set of hypotheses that are “within” the realm of scientific discourse. If it’s a plausible hypothesis for an experiment, it’s likely plausible to be discussed in public.

What we have instead is a “debate” over HCQ, where academics are publishing papers conflicting with each other and doctors are giving people the drugs and reporting the results, before promptly being blocked by Big Tech on the grounds of being “unscientific.”

The debate over HCQ has both sides thinking the other is killing people. One side happens to be right, and history will not judge those who were wrong very kindly. The American ideal of a citizen informed enough to make decisions in the voting booth stands in direct contrast to a citizen who must be disciplined for drawing obvious logical conclusions about the relationship between vitamins and viruses.

There is even an ironic, 1984-esque doublethink in the usage of statistics. You see, when society needs to make intelligent mathematical analysis happen, it will happen. Some teams at Microsoft, my previous employer, are masters of statistical analysis of both the Bayesian and the frequentist variety. If our society needs the truth about important things, such as how to make people click on ads, good honest statistics will be allowed to happen sometimes. But if we want to figure out whether a drug has a positive cost benefit for one’s health—forget about it.

Other sciences suffer from major problems as well. You can tell a particular field has issues by asking, “has society gotten better or worse on the factors studied by the science? And have we done so by looking at official advice or ignoring it?” The obvious example is nutrition. Obesity, as well as a host of other nutrition-related problems, has increased in America. If the entire nation is failing, then how good can the scientific establishment of nutrition be?

Of course, one could go paper by paper and see where individual publications make errors of judgment, but they are likely to fall into predictable categories: absence of actual models, lack of causal graphs, inappropriate controlling for variables. The problem here is that the science is failing as a whole, or we would not be having the health problems we are having. Understanding nutrition as a flawed science is perhaps one of the hallmarks of someone who is an intellectual versus someone who’s just pretending.

None of this is truly groundbreaking or new. I have heard stories of professors at Yale in one department being mad about professors in another department teaching p-hacking to students. Inter- and intra-discipline fights are common, which isn’t necessarily a bad thing as long as the overall combined output of the field is correlated with reality. However, the journalistic tendency to signal boost any paper that can have political impact amplifies some fights over others, further screwing up an already shaky system.

A New Birth of Reason

So right now science is losing its status among the general population. It is also losing status among those who can actually read statistics. This is both horrifying and encouraging. Without a structured way to sift actual reality into social reality, the social reality will diverge from reality, with further and further breakdown of health and sanity for society.

This is true both for academics and for non-academics. The anti-science polarization does not necessarily arrive at the truth, as it is subject to its own signaling spirals. But it at least provides some counterpoint to the bizarre hegemony of ideas such as “if the disease doesn’t have a cure, then all attempts to establish helpful drugs must be banned,” which is the implicit philosophy of the tech censors.

There are deeper problems than just the type signature of what is being produced or false assumptions about what counts as “evidence,” “information,” or “misinformation.” Each scientific discipline generally has an incentive to ensure that it grows and doesn’t get absorbed into other disciplines. If, for example, it were found that large aspects of mental illness are rooted in particular known social or nutritional problems, then the mental illness industry could be dissolved into a social science or some biological science. This would be disadvantageous to the industry, so the industry has a disincentive to uncover the causes behind its own core concepts.

True foundational research in a scientific discipline does not seek to expand that discipline, but rather to collapse it, just as a good engineer automates himself out of a particular task. However, our general epistemic foundation keeps producing large numbers of concepts treated as “things-in-and-of-themselves,” with an independent identity disconnected from causality.

These “things-in-and-of-themselves” then find their way into identity, Twitter bios, and politics, closing the feedback loop of establishing social reality, complete with the social prohibition: “do not ask what causes this, that’s unscientific!” This is a key problem of social order that eventually leads to the destruction of social order and civilization as a whole, all under the name of “science.” It has been noted by many philosophers, yet no society has ever come up with a lasting solution.

For example, even something like “climate” is now a “thing-in-and-of-itself,” which means it can only be either “good” or “bad,” with an established set of permitted actions that may affect it. Of course, one could ask a more precise question, such as “if we cut down more trees on the West Coast, would we have less intense forest fires there?” without invoking the “climate” as a whole. However, this is taboo, since “climate” is sacred and asking questions about parts of the sacred is taboo.

Is there still hope that we can convince the current “scientific establishment” to get back to the roots? I don’t have much. Reform will more likely be driven by a cadre of people who don’t think of themselves as “scientists,” unless in jest, but who have rapid enough feedback cycles from reality to begin to notice previously hidden patterns in it.

The future of science, as the Russian proverb goes, is its well-forgotten past: something that is produced by experts, but either understood by the common man or precise enough to be understood by a computer, as long as all the steps are verified. The future of science is models, not statements, and causal diagrams rather than frequentist nonsense of chasing p-values and correlations. Whether or not this will come under the banner of “science” remains to be seen.

The American Mind presents a range of perspectives. Views are writers’ own and do not necessarily represent those of The Claremont Institute.

The American Mind is a publication of the Claremont Institute, a non-profit 501(c)(3) organization, dedicated to restoring the principles of the American Founding to their rightful, preeminent authority in our national life.
