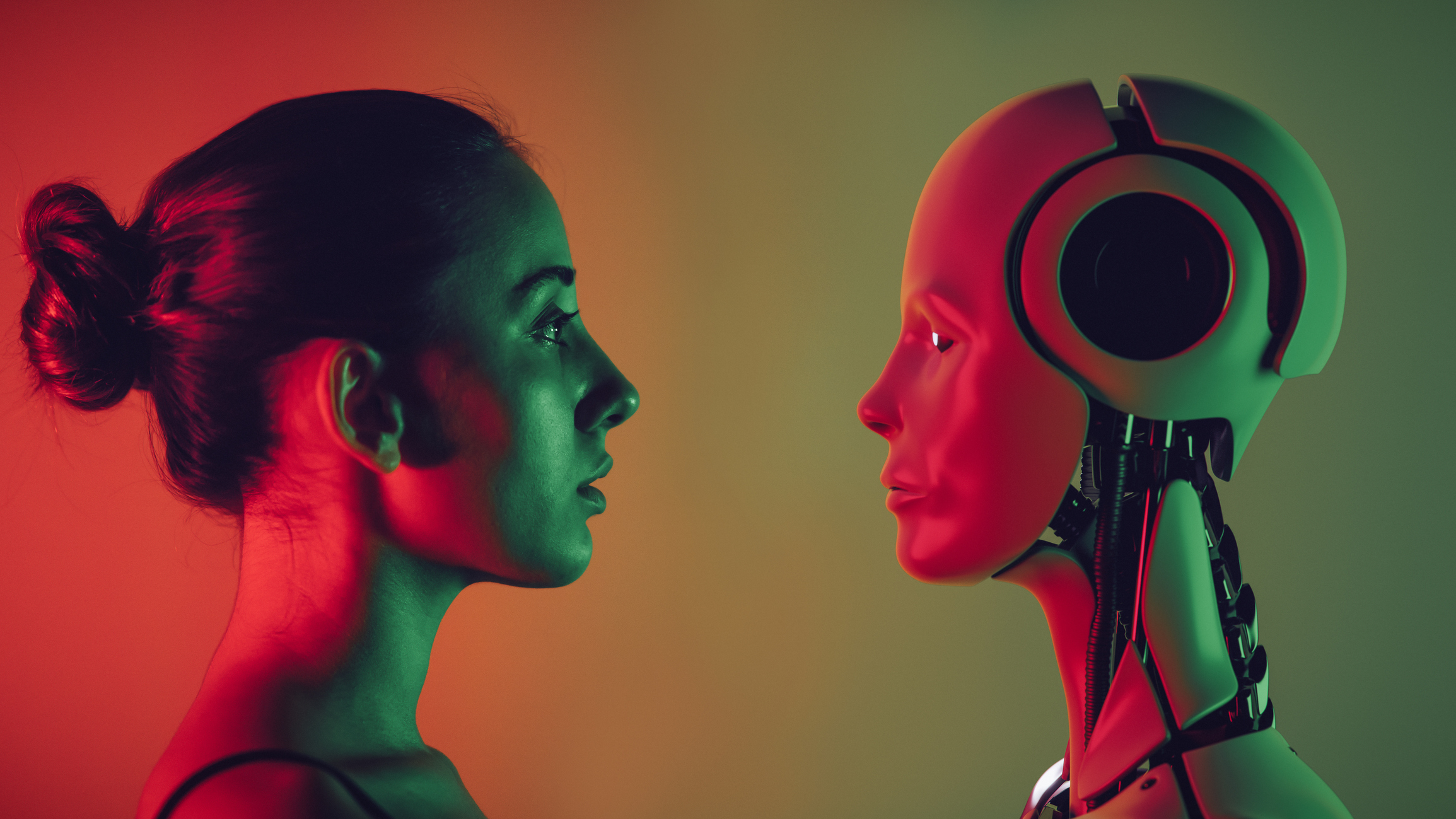

Black-Boxing Democracy

Putting technology ahead of humanity in decision making will end democratic self-rule.

The following is adapted from testimony presented before the United States Senate Committee on Homeland Security & Governmental Affairs on May 16, 2023, on the topic of “Artificial Intelligence in Government.”

Throughout history, warfare has spurred the development of transformative new technologies. My experiences in the War on Terror provided me with a glimpse of the AI revolution that is now remaking America’s political system and culture in ways that have already proved incompatible with our system of democracy and self-government and may soon become irreversible.

I first encountered issues of artificial intelligence and governance when I was deployed to Western Afghanistan in 2012. What I found there was that, in the midst of a great deal of confusion and ambivalence about the U.S. mission and what we were still trying to accomplish after a decade in Afghanistan, the military had turned to powerful new information technologies to fill the strategic void.

On critical fronts like defeating the Taliban and standing up the Afghan security forces, our success remained illusory. But in the face of this systemic failure and stagnation, the U.S. developed a special capacity for building databases.

The theory of data-driven warfare was that by collecting enough information and marrying it with the proper algorithms and AIs that could perform “predictive analysis,” one opened a technical portal into the future. We could stop the next IED attack or ambush before it occurred, control events on the ground, and win over the Afghans to our cause.

It did not work out that way. Years before the U.S. withdrawal and the Taliban’s return to power, I had come to see that the gap between our official metrics of success and the reality on the ground was not only a result of measuring the wrong things. By translating critical questions of politics and policy into the language of data, we had outsourced the most fundamental responsibilities of statecraft to machines while rendering the essential notions of war, victory, and peace obscure to America’s leaders.

AI moves us at an exponential rate from obscurity to the impenetrable darkness of the so-called “black box.” As the computer scientist Stephen Wolfram noted when he testified before the Senate in 2019, “If we want to seriously use the power of computation—and AI—then inevitably there won’t be a ‘human-explainable’ story about what’s happening inside.”

America was founded on the ideal that individual citizens, through their free and informed actions, should participate in their government. But for a free people to be participants in the making of their own laws and the meaning of their own lives, they must be knowledgeable about the world around them. Centralized applications of AI that invisibly alter the architecture of perception and reality—for instance by performing mass censorship of certain phrases or narratives—make such knowledge impossible. Moreover, as the writer James Poulos notes, the AI approach to governance undermines individuals’ faith in their own capacity for reasoned action because it is “driven by a logic of seeing technology as better and stronger than humanity.”

In other words, AI is intrinsically threatening to human interests, has a transformational potential on the order of the printing press or the wheel, and is at this moment being funded and deployed by multiple government agencies. Yet it currently functions like an alien life form, one that appears destined to move even further from human understanding the more it progresses.

And yet, there is no chance that the U.S. government and U.S.-based corporations are going to abandon a technology this powerful. Nor would such an outcome necessarily be desirable, given that it would cede the space to competitors like China.

We seem to be caught in a trap. There is a vital national interest in promoting the advancement of AI. Yet, at present, the government’s primary use of AI has been as a political weapon to censor information that it or its third-party partners deem harmful.

Recent years offer abundant examples of this kind of AI-driven informational control system, which is deployed at every opportunity in the name of public safety and emergency.

And it is in the name of safety that government officials are now calling for even more control over AI.

Earlier this month, Jen Easterly, the director of the Cybersecurity and Infrastructure Security Agency, called for more regulation of AI, warning, “We need to be very, very mindful of making some of the mistakes with artificial intelligence that we’ve made with technology.”

But regulating AI so that it becomes an even more powerful tool of censorship for enforcing party orthodoxies will increase neither our safety nor our security.

Easterly also recently argued that “China has already established guardrails to ensure that AI represents Chinese values, and the U.S. should do the same.”

While emulation of the Chinese model of top-down party-driven social control does appear to be the direction that AI and governance are moving in the U.S., I would submit respectfully that continuing in that direction will mean the end of our tradition of self-government and the American way of life.

The American Mind presents a range of perspectives. Views are writers’ own and do not necessarily represent those of The Claremont Institute.

The American Mind is a publication of the Claremont Institute, a non-profit 501(c)(3) organization, dedicated to restoring the principles of the American Founding to their rightful, preeminent authority in our national life.
